A long time ago, back in 2023, AGI was just around the corner. LLMs had exploded into the public consciousness and were coming for your jobs. What this meant in practice was that, in order to be a respectable company worthy of investor attention and the continued confidence and faith of your customers, you needed to make friends with AI!

This most often manifested in a company allowing its customers to interact with whatever platform they already knew and loved by conversing with a digital avatar of an all-knowing and all-powerful being living somewhere in the cloud. This omniscient entity would understand the user on a deeper level, know them better than they even knew themselves and help them on their quest to bring home that sweet, sweet shareholder value. Also known as a chatbot. This is the story of how I built one for a client.

So how does a chatbot work?

Despite the mystical aura around AI, a chatbot, like most interactions with an LLM-based artificial "intelligence", is the result of composing a few simple building blocks. In this post we sadly omit discussion of the internals of the most interesting building block - the LLM itself. Building one from scratch is something big corporations, backed by their armies of researchers, spend on the order of tens of millions of dollars on, and there's no shortage of incredibly high quality material on the topic, (mostly) freely available:

- Andrej Karpathy's "Neural Networks: Zero to Hero" playlist

- 3Blue1Brown - "Neural Networks" playlist

- https://sebastianraschka.com/teaching/

For the purposes of this post, we (along with the other 99% of people building on top of LLMs) treat the LLM itself as a black box: if we give it some correctly arranged input, magically coherent output comes out from the other end - hopefully.

What is "correctly arranged input"?

On the most fundamental level, LLMs take a sequence of text as input and, based on that sequence and their internal "knowledge", return another sequence of text as output.

Technically, LLMs operate on sequences of tokens, but there's effectively a one-to-one mapping between text and tokens and almost no application utilizing LLMs deals with tokens directly (except when counting them for budgeting purposes, more on that later). For a deep dive into tokenization, check out Andrej's "Let's build the GPT Tokenizer".
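To make the text-to-tokens round trip concrete, here is a toy sketch. It assumes a byte-level "tokenizer" where every UTF-8 byte is its own token, which is not what production models use (they rely on learned subword vocabularies), but the encode/decode contract is the same idea.

```python
def encode(text: str) -> list[int]:
    """Toy tokenizer (an assumption for illustration): one token id per UTF-8 byte."""
    return list(text.encode("utf-8"))


def decode(token_ids: list[int]) -> str:
    """Inverse of encode: turn the token ids back into the original text."""
    return bytes(token_ids).decode("utf-8")


tokens = encode("Hello, LLM!")
print(tokens)           # a list of integers, one per byte
print(len(tokens))      # this count is what you would budget against
print(decode(tokens))   # the original text again
```

The only number the application side usually cares about is the length of that list.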

Although a language model itself only deals with a single sequence of text, most real-world applications built on top of the models break up this sequence into logical blocks. You can think of it as a convenient abstraction that LLM providers expose through their APIs. Most APIs define different types of blocks, like system, user and assistant (nowadays also tool_call), and there's some ceremony around how exactly you place these blocks into an API call, but I believe it is a useful mental model to think about these individual blocks as just string buffers.

An example request to the OpenAI API:

    {
      "model": "gpt-4o-mini",
      "messages": [
        {
          "role": "system",
          "content": "You are a helpful assistant."
        },
        {
          "role": "user",
          "content": "Explain quantum computing in simple terms."
        }
      ],
      "max_tokens": 150,
      "temperature": 0.7
    }

On their back end, an LLM provider will take these individual string buffers and, following whatever ceremony they have settled on, concatenate them together into one large buffer, convert that into tokens and run their language model with that large token sequence as input, eventually returning the LLM's response sequence wrapped as an assistant message. And the main content of this message is, guess what? A string buffer!
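As a rough illustration of that concatenation step, here is a minimal sketch. The `<|role|>` and `<|end|>` delimiters and the `render_chat` helper are made up for this example; every provider uses its own template and most of them don't expose it.

```python
def render_chat(messages: list[dict[str, str]]) -> str:
    """Flatten role-tagged blocks into one string buffer.

    The delimiters below are hypothetical, not any provider's real template.
    """
    parts = []
    for message in messages:
        parts.append(f"<|{message['role']}|>\n{message['content']}\n<|end|>\n")
    # Leave an open assistant block for the model to continue from.
    parts.append("<|assistant|>\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Explain quantum computing in simple terms."},
]
prompt = render_chat(messages)
print(prompt)
```

Whatever the model generates after the trailing assistant marker is what comes back to you as the assistant message.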

So if it's just string buffers anyway, why all the ceremony around it? There are practical reasons for segmenting a big buffer into blocks and they mostly derive from the fact that LLMs are fine-tuned with sequences of a specific structure. After seeing millions of such carefully crafted sequences during training, the model is eventually coerced into treating the contents of the system prompt block as instructions and, in the name of "safety", into not letting anything in the user block override the instructions in other blocks. This mechanism is not foolproof, but it can be useful - at least that's what's being sold by the providers.

Segmenting the input context also has the additional benefit of making it possible to cache parts of the context at different stages.

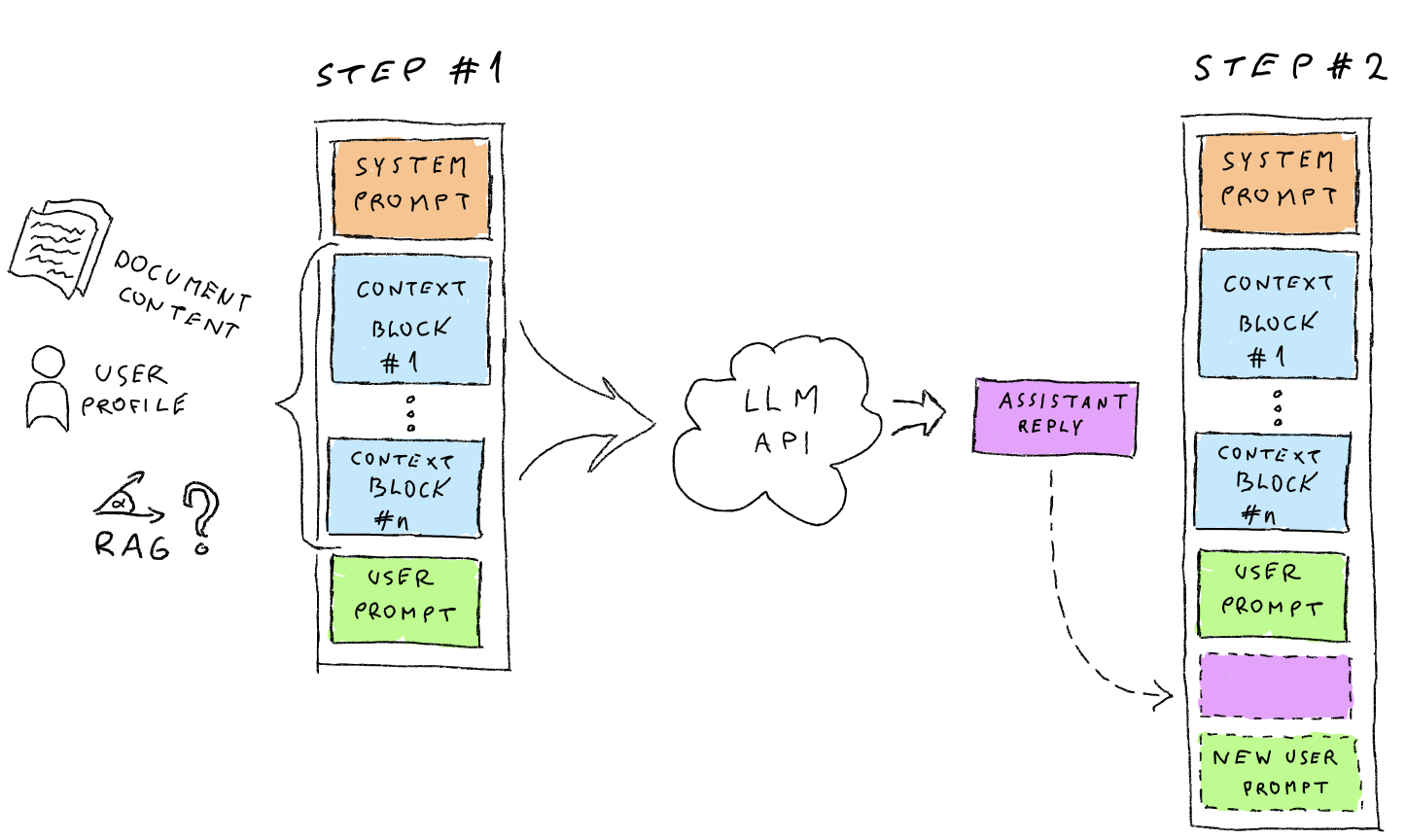

Now, armed with this knowledge, let's map out a typical dialogue flow for a chatbot:

1. We have a user eagerly awaiting their turn to engage with the all-knowing wisdom of the oracle that is the chatbot. They type their prompt into an input box.

2. We gather different blocks of context that we believe are necessary for the LLM to pretend to be an expert in the user's particular field of interest. This context can be any piece of information that we can coerce into a string buffer:

   - a system prompt instructing the LLM to structure its reply in a specific tone or format

   - documents or sections of documents

   - the user's profile information

   - some facts retrieved from a previously built database, based on their semantic similarity to the user's prompt (RAG)

   - "proprietary insights"

   Managing this additional context properly turns out to be the most difficult part of the entire flow.

3. To the aggregated context from the previous step, we append the block that is the user prompt, wrap it all up with the convention of our particular LLM provider's API and send it off as a request.

4. After receiving a reply from the LLM, we display it to the user in a colorful message bubble. Next we let them input another query or prompt, concatenate the LLM's previous reply to our already aggregated context, together with the new prompt from the user, and repeat step 3.
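The steps above can be sketched in a few lines. This is a simplified illustration, not the client's implementation: `fake_llm` stands in for a real provider call, `build_context` returns placeholder blocks, and the context is gathered only once on the first turn (a real system might refresh some blocks on every turn).

```python
from typing import Callable

Message = dict[str, str]


def fake_llm(messages: list[Message]) -> str:
    """Stand-in for a real provider call; it only reports what it received."""
    return f"(reply based on {len(messages)} messages)"


def build_context(user_prompt: str) -> list[Message]:
    """Step 2: gather context blocks. The contents here are placeholders."""
    return [
        {"role": "system", "content": "Answer in a concise, friendly tone."},
        {"role": "system", "content": "User profile: (placeholder)"},
    ]


def chat_turn(
    history: list[Message],
    user_prompt: str,
    llm: Callable[[list[Message]], str],
) -> str:
    if not history:
        history.extend(build_context(user_prompt))
    # Step 3: append the user's prompt and send everything off.
    history.append({"role": "user", "content": user_prompt})
    reply = llm(history)
    # Step 4: keep the reply so the next turn sees the whole conversation.
    history.append({"role": "assistant", "content": reply})
    return reply


history: list[Message] = []
print(chat_turn(history, "First question", fake_llm))
print(chat_turn(history, "Follow-up question", fake_llm))
```

Note that the model itself remembers nothing between the two calls; the ever-growing `history` list is the entire memory.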

The context orchestration part of the described flow is depicted in the following figure:

And that, boys and girls, is how the illusion of a conversation with continuity is created and maintained in a chatbot. In the following sections I'll go over an actual practical implementation of the above conceptual flow and describe some lessons learned while implementing it.


A real-world use case

My client was a startup in the executive hiring industry. The main users of their platform were hiring assistants whose job it was to evaluate candidates for different (usually high-level) positions in some organisation or another. For these evaluations the platform aggregated various data sources for each candidate, like their results from different psychometric personality assessments, work history, notes from interviews etc. It then presented insights from this data to the hiring assistants, mostly through predefined views and workflows for them to click through, letting them evaluate things like how a particular candidate would fit into a team of other candidates and the like.

To put it another way, these workflows would enable the assistant to make hiring decisions based on some gathered evidence (the various pieces of data collected about each candidate or team). Now, as these workflows were rather static and each new nugget of extracted information needed to be hard-coded into a workflow, we had the hypothesis that using a chatbot as an interface to the myriad of data pieces already collected would allow the user to discover potentially novel insights and make the entire evaluation process more dynamic. Essentially, the hiring assistant could have a dialogue with a virtual assistant, ask questions about a particular candidate or team and the virtual assistant would pull out relevant pieces of information and formulate its answers based on that.

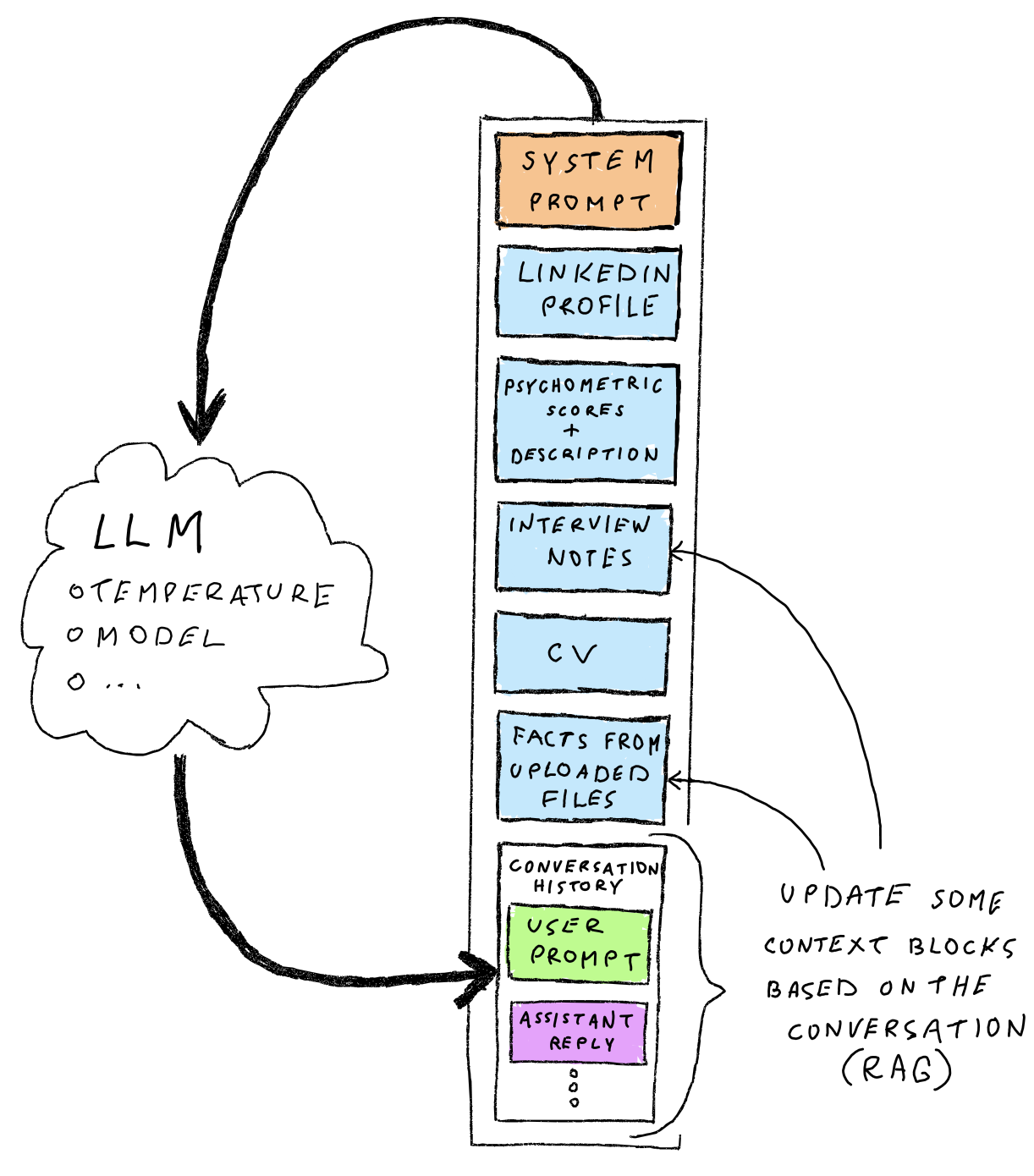

Below you can see what the prompt structure of the first iteration of the assistant's assistant looked like:


Keep in mind that this was before tool calls were a thing, and no option to upload files directly to any LLM provider through a convenient interface even existed - all the context pieces (and where and when they would be added) needed to be managed manually.

Juggling the moving parts

The term "context engineering" had not been invented yet. There was, however, no shortage of YouTube tech influencers armed with an endless supply of clickbait-y headlines who tried their darndest to capitalize on teaching people the dark art of "prompt engineering". Narrowly avoiding the flying shrapnel of the hype grenade, we realized quite early on that we wanted the ability to tweak and tune our assistant's assistant in a manner that was more flexible than what mere prompt editing could offer.

As you can see from the above sketch, not that many of the context pieces were just prompts, and there were already more than a handful of moving pieces right off the bat. The expectation was that the number of possible things we would want to integrate into the context would only increase as time went by.

An added constraint was that the client didn't have the luxury of a big in-house development team, so we very much wanted to keep minor tweaks to the assistant's context, and the debugging of output errors, from becoming something only a developer could do.

Out of these needs, wants and constraints, "Prompt Editor v1" was born, with a grossly understated name. Understated because, in addition to facilitating the editing of some prompts, it provided a holistic approach to orchestrating different context pieces.

Keep in mind that "Prompt Editor v1" was an internal tool to configure the inner workings of the chatbot that the actual users of the client's platform were going to interact with. The platform itself and the "real" chatbot UI were designed by people called designers and were rather pretty. "Prompt Editor v1", however, wasn't much to look at aesthetically, but I like to think its visual design (or lack thereof) was a testament to its usefulness - embodying the idea of substance over form.

It provided us with multiple affordances which I'll briefly discuss in the following sections.

A combinatorial explosion of turnable knobs

An LLM is a stochastic beast, meaning that even if you give it the exact same input on different occasions, it rarely returns exactly the same output. On a global level, the randomness of its output can be tuned by the infamous temperature parameter.
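For intuition on what that knob does, here is a small sketch of the standard trick of dividing the model's raw scores (logits) by the temperature before turning them into probabilities. The logits are invented numbers, and how any particular provider applies temperature internally is an assumption on my part.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; temperature must be positive here."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for temperature in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

Lower temperatures pile almost all of the probability onto the top-scoring token, while higher ones flatten the distribution so that less likely tokens get sampled more often.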

But the temperature parameter is hardly the only thing that has an effect on the output.

From instructions in the system prompt to sizes of the different context blocks, hell, even the order of context blocks - all of this can have an effect on how coherent the output from the model is relative to the task at hand. Not to mention the fact that most context blocks themselves had tunable parameters deeply tied to the internals of the client's platform. Trying to manage all of these knobs quickly led to a combinatorial explosion.
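A bit of back-of-the-envelope arithmetic shows how fast this gets out of hand. The knob names and values below are invented for illustration and are not the ones from the client's platform.

```python
from itertools import product

# Hypothetical knobs, each with only a handful of settings.
knobs = {
    "temperature": [0.0, 0.3, 0.7, 1.0],
    "system_prompt_variant": ["formal", "casual", "terse"],
    "block_order": ["profile_first", "documents_first"],
    "rag_block_token_limit": [500, 1000, 2000],
    "history_turns_kept": [2, 5, 10],
}

configs = list(product(*knobs.values()))
print(len(configs))  # 4 * 3 * 2 * 3 * 3 = 216 distinct configurations
```

Five modest knobs already give a couple of hundred combinations, and every extra knob multiplies that number again.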

That's not to say that our application was a silver bullet for this, but it definitely provided a more structured approach that allowed for way quicker iteration than changes in code ever could.

Token limits

As mentioned in the technical introduction above, there's rarely a need to deal with tokens directly on the application side, except when you want to make sure that you're not overflowing the finite context window of the language model. Keep in mind that this was a time when GPT-3.5 was still a thing and context windows ranged from 4K to 32K tokens max. A couple of large PDF documents and some data from LinkedIn could easily overflow this "small" token limit.

Even now with context windows of the average model being around 100K tokens, it still makes sense to manage the token budget tightly. Not only will you save some precious $-s on your LLM provider bill, but it has technical benefits as well:

- sending less data on every turn means lower latency and a faster user experience - this will start to matter at scale

- LLMs still get confused with large contexts and you will start to notice discrepancies in your outputs (unless you don't monitor your outputs, then you won't notice and have nothing to worry about)

- Some of our context blocks were compiled using an elaborate RAG mechanism. A RAG-based context block would order its internal content sections based on some notion of "similarity" to the conversation so far. Limiting the token size of those context blocks provided a natural cutoff point: by only including full sections that fit into the token limit of that particular block, we always included only the most relevant pieces of information by default without having to constantly tune any "relevancy cutoff" parameter manually.
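The cutoff described in the last point can be sketched roughly like this. The word-based `count_tokens` is a crude stand-in (a real application would use the tokenizer of its model), and the scores and section texts are made up.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_sections(sections: list[tuple[float, str]], token_limit: int) -> list[str]:
    """Keep whole sections, most similar first, until the next one would not fit."""
    kept: list[str] = []
    used = 0
    for _score, text in sorted(sections, key=lambda s: s[0], reverse=True):
        cost = count_tokens(text)
        if used + cost > token_limit:
            break  # natural cutoff: everything after this is less relevant anyway
        kept.append(text)
        used += cost
    return kept


sections = [
    (0.91, "Led a team of twelve engineers"),
    (0.42, "Enjoys hiking on weekends"),
    (0.77, "Scored high on conscientiousness in the assessment"),
]
print(fit_sections(sections, token_limit=14))
```

Stopping at the first section that doesn't fit is one possible reading of the cutoff; skipping it and trying smaller, less relevant sections would pack the budget more tightly at the cost of relevance.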

Turn an engineering problem into a business problem

This turned out to be a big one! There was a widely held belief outside the tech sector (and in some places even inside it) that anything touching AI is the domain of either PhDs or wizards or at least engineers. Our tool made a completely new way of working possible inside the company: it enabled non-engineers to build and experiment with different, much more dynamic workflows in their own domain of expertise.

Although engineering effort was still required to develop the individual context blocks that integrated into the client's existing platform, orchestrating those blocks and combining them in novel ways was entirely done by people on the business side.

After all, who would be better at judging the responses of an assistant based on some gathered evidence than the people who have years of experience analyzing the same evidence manually?
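One way to get this kind of split is to let engineers register context blocks in code while the selection, order and parameters of the blocks live in plain data that a tool can edit. The sketch below is a hypothetical reconstruction of that idea, not the actual architecture of "Prompt Editor v1"; all block names and the config format are invented.

```python
import json
from typing import Callable

# Engineers register block builders; each one returns a string buffer.
BLOCKS: dict[str, Callable[..., str]] = {}


def block(name: str):
    """Decorator that registers a context block under a name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        BLOCKS[name] = fn
        return fn
    return register


@block("system_prompt")
def system_prompt(text: str) -> str:
    return text


@block("candidate_profile")
def candidate_profile(candidate_id: str) -> str:
    # Placeholder: a real block would query the platform's data.
    return f"Profile of candidate {candidate_id}: (placeholder data)"


# The part a non-engineer edits: which blocks, in which order, with which parameters.
CONFIG = json.loads("""
[
  {"block": "system_prompt", "params": {"text": "You assist with candidate evaluation."}},
  {"block": "candidate_profile", "params": {"candidate_id": "c-42"}}
]
""")


def assemble(config: list[dict]) -> list[str]:
    """Build the context by running the configured blocks in order."""
    return [BLOCKS[entry["block"]](**entry["params"]) for entry in config]


context = assemble(CONFIG)
print(context)
```

Reordering or re-parameterising the context then means editing the config, not the code.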

Reproduce and debug production issues

Debugging is mostly tedious. Debugging issues in a stochastic system where nothing is really guaranteed can be even more tedious. I believe the biggest dividends with regard to debugging came from the fact that "Prompt Editor v1" made it easy to narrow down the source of discrepancies in the output. We could load up any context configuration (from either the client's live or development environment) and interactively walk through any piece of context (you can see from the above clip that as the context for a prompt was being pulled together, the output of the different pieces was immediately streamed over a websocket to the editor).

This allowed us to quickly narrow down whether issues were caused by missing or inconsistent data or LLM hallucinations or a mixture of both.
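The gist of that streaming can be sketched as a pipeline that emits one structured event per context block. Everything here is illustrative: the event fields and block names are invented, and `emit` simply collects JSON strings into a list, whereas in a setup like ours it would be whatever function sends a message over the websocket.

```python
import json
import time
from typing import Callable


def run_pipeline(
    blocks: dict[str, Callable[[], str]],
    emit: Callable[[str], None],
) -> list[str]:
    """Build each context block and emit a JSON event describing the result."""
    outputs: list[str] = []
    for name, build in blocks.items():
        started = time.perf_counter()
        try:
            text = build()
            event = {"block": name, "status": "ok", "chars": len(text), "preview": text[:80]}
            outputs.append(text)
        except Exception as exc:  # report the failure instead of hiding it
            event = {"block": name, "status": "error", "error": repr(exc)}
        event["elapsed_ms"] = round((time.perf_counter() - started) * 1000, 2)
        emit(json.dumps(event))
    return outputs


def missing_data() -> str:
    raise KeyError("interview_notes")


events: list[str] = []
outputs = run_pipeline(
    {
        "system_prompt": lambda: "You assist with candidate evaluation.",
        "interview_notes": missing_data,
    },
    emit=events.append,
)
for event in events:
    print(event)
```

An event with an error status points at missing or inconsistent data, while a run where every block reports ok but the answer is still off points more towards the model itself.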

Did we reinvent the wheel?

Well, yes and no. Did tools with similar functionality already exist? Absolutely! I would say there were maybe even too many tools available, each possessing a different subset of the features essential for our product. We actually did spend more time and effort than I feel comfortable admitting trying to evaluate and shoehorn a couple of these existing tools and frameworks to fit our purpose, before we came to the conclusion to write our own. In hindsight the experience of dissecting other tools and frameworks probably played a large part in coming up with a suitable architecture for Prompt Editor v1.

Here are some takeaways we distilled from the process of building our own solution:

- Whether you're building a module or service from scratch or trying to incorporate some off-the-shelf tool or framework into an existing system, you have to deal with integration in both cases. There is however a huge difference in the size of the surface area of integration related code between the two options. Choosing to integrate an existing framework means that unless the abstractions used in your existing business domain match up perfectly with the abstractions used by the framework (they won't!), you have to write an additional translation layer between the two. Implementing things from scratch means that you can directly use and model against already existing business domain abstractions. The resulting module or service will also be more tightly integrated with the existing system.

- Use existing tools and libraries as a reference or better yet scavenge them for spare parts, but don't try to cram them into your existing platform as a whole. For example, there's usually no need to write your own PDF loader, but depending on your needs, you might want to write your own abstractions for the structure of a parsed PDF.

- Nobody really knows what the best abstractions for working with LLMs are yet, but everybody is in the process of finding out. Just because a framework is popular doesn't mean its abstractions are good. There are no "industry standard" abstractions regarding LLMs carved in stone (besides maybe the OpenAI API endpoint specification).

- Write your own debugging tools. Whether using existing frameworks or writing your own, you can get a lot of value by streaming some structured outputs from the middle of a running pipeline to some custom UI components over websockets. I might write a separate post about this in the future.

Conclusion

Turns out it was (and still is) possible to build genuinely useful tools with "AI", despite the nauseating hype of LLMs being the hammer for every nail. My client and their clients got a lot of business value out of the context editor tool and the new dynamic workflows it facilitated on their existing platform. So much so that not long after launching the new chat-based workflows, their startup got acquired by a much larger enterprise.

The usefulness of this sort of context engineering is by no means limited to only chat based flows. I plan on writing another post in the future on the topic of how to orchestrate different pieces of context in an "agentic flow" setting.